Most of what has been posted on this site in the past has been focused on getting new guest posts published, either in broad strokes or with an emphasis on specific sites. What about old posts, though? Those old guest posts you built years ago. Are they still good? Are they still valuable? Or could they be stabbing you in the back, in a way you don’t notice, precisely because they’ve been there for years?

Years and years ago, guest posts were a lot different than they are now. It used to be a world where you could send out one post to ten different blogs, get five of them to publish it, and rake in the rewards. It didn’t matter that the posts were the same, that the links all had the same anchor text, or anything else.

In 2011, Google’s Panda algorithm update made it dangerous to have duplicate content on your site. Many sites accused of duplicating content removed it, but plenty of sites left their old content lying around, under the assumption that it didn’t matter. After all, who cares about a four-year-old, zero-traffic blog post, anyway?

In 2014, Matt Cutts published his famous post about the death of guest blogging. It temporarily hurt the technique as a whole and cut off many of the spammier guest blogging methods, but as we all know, guest posting never truly died. Only spammy guest posting died.

The question then, and the question now, is whether or not those old guest posts are hurting you. If you published a bunch of identical guest posts back in 2010, are those links hurting you today?

I’m going to cover guest blogging from both perspectives: the poster and the host. Either one can potentially hold your site back, like weights on a runner.

The Posts You Wrote

First up, let’s talk about the posts you wrote for other sites, perhaps years ago, perhaps even longer. Any guest post you created for another site may have turned sour over the years.

Let’s get one thing out of the way right up front. If you’ve only recently started guest posting, chances are your links are fine. If you’ve been focusing entirely on high quality content, natural links, and organic relationships, you’re probably fine too.

Barry Schwartz, one of the foremost authorities on SEO, wrote about this topic back in 2014 in response to the Cutts blog post. In his post, he states up front that if you’re considering disavowing a link, you probably should. “Better to be safe than sorry”, after all, right?

Personally, I feel that this approach is a great way to neuter a site and undo years’ worth of growth. Sure, you will probably disavow some bad links, but with such a broad-spectrum strategy you may also disavow good ones.

What you need to do is perform a backlink audit. If you happen to have a complete record of every guest post backlink you’ve ever accumulated, you can use that as a starting point, but honestly, performing a full site link audit once every year or two is generally a good idea. Google’s standards are always changing, sites can change and rearrange at any time, and you never know what is and isn’t worth caring about.

To perform a link audit, you need to do three things. The first is to harvest as complete a picture of your links as possible. The second is to judge those links for their value. The third is to deal with the links you don’t think are worth keeping around.

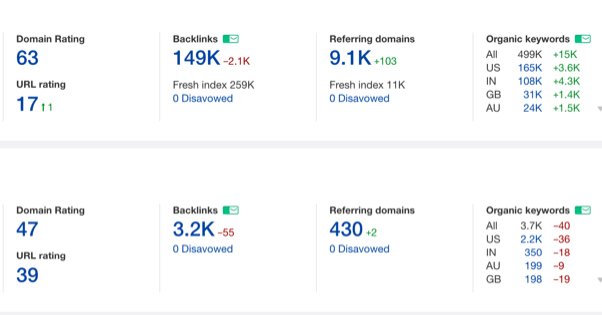

The first thing you need to do, then, is start harvesting your backlinks. I recommend pulling from as many sources as you can reasonably use, because each link monitoring tool maintains its own index and will find a different set of backlinks. This would all be a lot easier if you could request a total index report from Google, but they don’t offer such a service, and with good reason: you can bet it would be heavily abused. Anyway, here are a few good tools you can use:

- Google Analytics. Your own site’s referral traffic reports will show you a decent selection of the links pointing to your site, or at least the ones that still send visitors.

- SEO SpyGlass. This tool pulls link information from a few different sources, including its own index and Google Search Console.

- Majestic SEO. Majestic is one of the foremost link analysis tools and has one of the most comprehensive indexes outside of Google’s.

- Moz’s Link Explorer. Moz is another high-end link monitoring tool and can give you a lot of useful information.

Pull as complete a link report as you can from each of these tools. Add all of the links they find to a spreadsheet with columns for the linking page, the linking root domain, the anchor text, and room for other metrics we’ll discuss in step two. Remove duplicates, of which there will be a lot, because these tools have plenty of overlap.
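If you’d rather script the merge than dedupe by hand, here is a minimal sketch. It assumes you’ve already loaded each tool’s export into a list of rows (for example with `csv.DictReader`) and renamed the columns to the hypothetical names `linking_page` and `anchor_text`; the function names are illustrative too. It treats two rows as duplicates when they share the same linking page (after light URL normalization) and the same anchor text, ignoring case.

```python
from urllib.parse import urlsplit, urlunsplit


def normalize_url(url):
    """Lowercase the scheme and host, drop the fragment and any trailing slash,
    so the same page reported by two tools collapses to one key."""
    parts = urlsplit(url.strip())
    path = parts.path.rstrip("/") or "/"
    return urlunsplit((parts.scheme.lower(), parts.netloc.lower(), path, parts.query, ""))


def root_domain(url):
    """Rough root domain: the hostname minus a leading 'www.'.
    A real audit should use the Public Suffix List (e.g. for .co.uk sites)."""
    host = urlsplit(url).hostname or ""
    return host[4:] if host.startswith("www.") else host


def merge_link_reports(*reports):
    """Merge rows from several tools, keeping one row per (linking page, anchor text)."""
    merged = {}
    for report in reports:
        for row in report:
            page = normalize_url(row["linking_page"])
            anchor = row.get("anchor_text", "").strip()
            key = (page, anchor.lower())
            if key not in merged:  # first tool to report a link wins
                merged[key] = {
                    "linking_page": page,
                    "root_domain": root_domain(page),
                    "anchor_text": anchor,
                }
    return list(merged.values())


# Hypothetical rows standing in for two tools' exports.
majestic = [{"linking_page": "https://www.blog.example/guest-post/", "anchor_text": "Content marketing tips"}]
moz = [
    {"linking_page": "https://WWW.blog.example/guest-post", "anchor_text": "content marketing tips"},
    {"linking_page": "https://other.example/roundup", "anchor_text": "this guide"},
]
rows = merge_link_reports(majestic, moz)
print(len(rows))  # 2: the two blog.example rows collapse into one
```

The normalization is deliberately conservative; it won’t merge `http` and `https` versions of a page, for example, so a few near-duplicates may still need a manual look.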

Next, you want to harvest data about your backlinks. I prefer to use Screaming Frog for a lot of this. Its crawler is an incredibly robust program and can get you a ton of useful information.

The first piece of information you want to add to your spreadsheet is whether each link is dofollow or nofollow. Nofollow links are meaningless for SEO, from a mechanical standpoint. There may be some minor value from the implicit mention, and they can still pass user traffic, but they don’t pass link juice, good or bad. This means you can go through your list of links and remove anything that’s nofollowed from consideration. Whether you disavow them or not, it changes nothing. Remember, disavowing links basically just means artificially adding them to a nofollow list.
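Screaming Frog can report this for you, but the underlying check is simple. Here is a minimal sketch, assuming you’ve already downloaded a linking page’s HTML; `classify_links_to_site` and `yoursite.example` are hypothetical names. It only reads the `rel` attribute on each `<a>` tag, so it won’t catch a page-wide nofollow set through a meta robots tag or an `X-Robots-Tag` header.

```python
from html.parser import HTMLParser
from urllib.parse import urlsplit


class AnchorCollector(HTMLParser):
    """Collect (href, rel tokens) for every <a> tag that has an href."""

    def __init__(self):
        super().__init__()
        self.links = []

    def handle_starttag(self, tag, attrs):
        if tag != "a":
            return
        attr_map = dict(attrs)  # attribute names arrive lowercased; values may be None
        href = attr_map.get("href")
        if href:
            rel_tokens = (attr_map.get("rel") or "").lower().split()
            self.links.append((href, rel_tokens))


def classify_links_to_site(html, site_host):
    """Return (href, 'nofollow' or 'dofollow') for each link on the page pointing at site_host."""
    collector = AnchorCollector()
    collector.feed(html)
    results = []
    for href, rel_tokens in collector.links:
        host = urlsplit(href).hostname or ""  # relative links have no host and are skipped
        if host == site_host or host.endswith("." + site_host):
            # rel="sponsored" and rel="ugc" (introduced by Google in 2019) are counted with nofollow.
            followed = not ({"nofollow", "sponsored", "ugc"} & set(rel_tokens))
            results.append((href, "dofollow" if followed else "nofollow"))
    return results


page_html = (
    '<p><a href="https://yoursite.example/blog/" rel="nofollow noopener">a guide</a>'
    ' <a href="https://www.yoursite.example/">Your Site</a> <a href="/about">about</a></p>'
)
print(classify_links_to_site(page_html, "yoursite.example"))
```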

Look for any links that point to your site but lead to a 404 or other error page. Broken links aren’t doing you any good, and they may be opportunities to build better links from those sources. They might also be an indication of a failed redirect on your site, or a broken page that shouldn’t be broken.
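To check the target end of each backlink yourself, a minimal sketch using only Python’s standard library might look like this. The function names and User-Agent string are hypothetical. Because `urllib` follows redirects automatically, a redirect chain that ends in a 404 is reported as 404; if you need to see the redirect hops themselves, a crawler like Screaming Frog reports them directly.

```python
import urllib.error
import urllib.request


def fetch_status(url, timeout=10.0):
    """Return the final HTTP status code for url, or None if it can't be reached."""
    for method in ("HEAD", "GET"):
        request = urllib.request.Request(url, method=method, headers={"User-Agent": "link-audit-sketch"})
        try:
            with urllib.request.urlopen(request, timeout=timeout) as response:
                return response.status
        except urllib.error.HTTPError as err:
            if err.code == 405 and method == "HEAD":
                continue  # some servers refuse HEAD requests; retry once with GET
            return err.code
        except OSError:
            return None  # DNS failure, refused connection, timeout, bad TLS, and so on
    return None


def flag_broken(statuses):
    """Given {target_url: status}, return the targets that error out or can't be reached."""
    return sorted(url for url, status in statuses.items() if status is None or status >= 400)


# Usage: statuses = {url: fetch_status(url) for url in target_urls}
# Hypothetical results shown here so the example runs offline.
statuses = {
    "https://yoursite.example/": 200,
    "https://yoursite.example/old-page": 404,
    "https://yoursite.example/moved": None,
}
print(flag_broken(statuses))
```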

Next, look for anchor text that looks, well, unnatural. If you have a ton of links with the same anchor text, or links with anchors that aren’t relevant to your niche, or anything that looks out of the ordinary, flag those links for further investigation.
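One quick way to spot a suspicious anchor profile is to measure how much of your link profile each anchor accounts for. This is a minimal sketch; the 10% cutoff is an arbitrary example, not a number Google publishes, and you’d normally exclude your brand name and bare URLs, which are expected to dominate a natural profile.

```python
from collections import Counter


def flag_overused_anchors(anchors, max_share=0.10, ignore=()):
    """Return {anchor: share of all links} for anchors above max_share.
    Branded or naked-URL anchors you expect to dominate can be passed in `ignore`."""
    normalized = [a.strip().lower() for a in anchors if a.strip()]
    total = len(normalized)
    skipped = {a.strip().lower() for a in ignore}
    flagged = {}
    for anchor, count in Counter(normalized).most_common():
        share = count / total
        if anchor not in skipped and share > max_share:
            flagged[anchor] = share
    return flagged


# Hypothetical anchor list pulled from the merged spreadsheet.
anchors = ["Your Brand"] * 5 + ["buy cheap widgets"] * 3 + ["great article", "this post"]
print(flag_overused_anchors(anchors, ignore=["Your Brand"]))
```

Here the exact-match commercial anchor stands out at 30% of all links, which is the kind of pattern worth flagging for a closer look.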

At this point you need to go through the remaining links and pull out any that look like they might be less than useful. Links from sites that are known to be parts of PBNs, links that look like paid links but aren’t disclosed, links that look like they come from scraped versions of your content, and so on. Some tools, like SEO SpyGlass, will give you a “penalty risk” analysis. These aren’t 100% reliable, but they can give you an idea of where to start.

Build up a list of any link you feel isn’t worth keeping, or that might be putting your site at risk, and take action.

Now, Google provides the Disavow Links Tool, but it prefers that you try to get the links removed in other ways first. Contact the site owner and ask them to remove the link. If you’re not able to get the links removed, then you can resort to the disavow tool.
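The disavow tool takes a plain text file with one entry per line: either a full URL, or `domain:` followed by a domain to disavow every link from it, with lines starting with `#` treated as comments. Here is a minimal sketch that builds that file from your audit list; `build_disavow_file` is a hypothetical helper, and it skips individual URLs on domains you’re already disavowing, since those lines would be redundant.

```python
from urllib.parse import urlsplit


def build_disavow_file(domains=(), urls=(), comment=None):
    """Build disavow-file text: an optional '#' comment, 'domain:' lines, then individual URLs."""
    domain_set = {d.strip().lower() for d in domains if d.strip()}
    lines = [f"# {comment}"] if comment else []
    lines += [f"domain:{d}" for d in sorted(domain_set)]
    for url in sorted({u.strip() for u in urls if u.strip()}):
        host = urlsplit(url).hostname or ""
        if any(host == d or host.endswith("." + d) for d in domain_set):
            continue  # already covered by a domain: line
        lines.append(url)
    return "\n".join(lines) + "\n"


# Hypothetical leftovers after removal requests went unanswered.
text = build_disavow_file(
    domains=["Spammy-PBN.example"],
    urls=["https://spammy-pbn.example/post-1", "https://scraper.example/copy-of-my-post"],
    comment="Removal requested, no reply",
)
print(text)
```

To upload it, save the text as a UTF-8 `.txt` file, e.g. `open("disavow.txt", "w", encoding="utf-8").write(text)`.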

The Posts You Host

The other way old guest posts might be holding you back is if your own site published them. Again, if you’ve been upholding high quality standards when you accept guest posts, you probably won’t have a problem.

What you want to do here is a content audit. You need to scan your site for pages that fail to meet minimum levels of quality for one reason or another. Maybe they’re old and haven’t been updated for the times. Maybe they’re accidentally copied. You never know what might have happened in the last half a decade or more, so it’s worth scanning your site and keeping track of how everything looks.

Remember, old pages with thin content might not be bringing in users, but that doesn’t mean they don’t matter. If you have 500 old blog posts that fail to meet minimum quality guidelines, it doesn’t matter that they were published years ago; they can still hold your site back.

Screaming Frog will be your top-tier tool for this. It’s a spider designed for auditing your own site rather than backlinks; using it for backlink data, as above, is more of a hack than anything. Turning it loose on your own site gets the full power out of it.

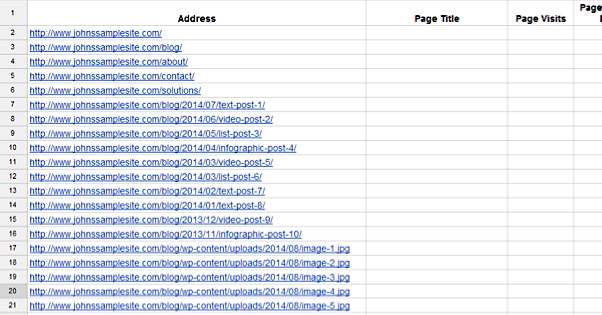

Set Screaming Frog loose on your site and build a list of all the pages it can see. Screaming Frog alone might not find every page, so also pull the URLs listed in your sitemap and in Google Search Console. Combine them into a spreadsheet, same as you did before, and remove duplicates.
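Combining those lists is a set operation. Here is a minimal sketch, assuming a standard `<urlset>` sitemap (sitemap index files, which point to other sitemaps, aren’t expanded here) and a plain list of URLs exported from your crawl and from Search Console; the function and key names are hypothetical. Normalize URLs first if your sources disagree on details like trailing slashes.

```python
import xml.etree.ElementTree as ET

# Namespace used by standard sitemaps (sitemaps.org protocol).
SITEMAP_NS = {"sm": "http://www.sitemaps.org/schemas/sitemap/0.9"}


def sitemap_urls(xml_text):
    """Return the set of <loc> URLs listed in a <urlset> sitemap."""
    root = ET.fromstring(xml_text)
    return {loc.text.strip() for loc in root.findall("sm:url/sm:loc", SITEMAP_NS) if loc.text}


def compare_page_lists(crawled, sitemap, search_console=()):
    """Merge page lists and show where the sources disagree."""
    crawled, sitemap, console = set(crawled), set(sitemap), set(search_console)
    return {
        "all_pages": sorted(crawled | sitemap | console),
        "missed_by_crawler": sorted((sitemap | console) - crawled),
        "missing_from_sitemap": sorted(crawled - sitemap),
    }


sitemap_xml = (
    '<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">'
    "<url><loc>https://yoursite.example/</loc></url>"
    "<url><loc>https://yoursite.example/old-guest-post</loc></url>"
    "</urlset>"
)
crawl = ["https://yoursite.example/", "https://yoursite.example/about"]
report = compare_page_lists(crawl, sitemap_urls(sitemap_xml))
print(report["missed_by_crawler"])
```

Pages the crawler missed are often orphaned old posts with no internal links, which makes them prime candidates for this audit.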

Next, harvest your relevant metrics. Screaming Frog gathers a lot of data, but you may want to use something like URL Profiler as well. Collect information like Moz’s URL-level data, robots access, the HTTP status of each page, and important Google metrics like whether or not it’s indexed, what its PageSpeed score is, and whether it’s mobile friendly.

Also consider running your site through a full check with something like Copyscape. This can help tell you if any old posts are actually duplicates. It’s entirely possible an old writer or old guest poster of yours duplicated the content from elsewhere. It’s also possible your content has been scraped and republished; when you find these cases, you can take action to get the stolen content removed.
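Copyscape checks your pages against the wider web, which you can’t easily replicate yourself. If you already have two texts to compare, though (say, two old guest posts you suspect are the same article, or a post and a suspected source), a common stand-in technique is to compare overlapping five-word sequences ("shingles") with Jaccard similarity. This is a minimal sketch of that technique, not how Copyscape works; the 0.5 threshold and the function names are assumptions. It compares every pair of pages, which is fine for a few hundred posts but slows down on very large sites.

```python
import re


def shingles(text, size=5):
    """Set of overlapping `size`-word sequences from the lowercased text."""
    words = re.findall(r"[a-z0-9']+", text.lower())
    if len(words) < size:
        return {tuple(words)} if words else set()
    return {tuple(words[i:i + size]) for i in range(len(words) - size + 1)}


def find_near_duplicates(pages, threshold=0.5, size=5):
    """pages maps URL -> body text. Return (url_a, url_b, Jaccard score) for every
    pair whose shingle sets overlap at or above threshold."""
    urls = sorted(pages)
    sets = {url: shingles(pages[url], size) for url in urls}
    pairs = []
    for i, a in enumerate(urls):
        for b in urls[i + 1:]:
            union = sets[a] | sets[b]
            if not union:
                continue
            score = len(sets[a] & sets[b]) / len(union)
            if score >= threshold:
                pairs.append((a, b, round(score, 3)))
    return pairs


# Hypothetical page texts; in practice, the body text extracted by your crawler.
text = "Guest posts were a lot different years ago when you could send one post to ten blogs"
pages = {
    "/guest-post-2012": text,
    "/guest-post-2013": text + ".",
    "/about": "We write blog content for small businesses and agencies",
}
print(find_near_duplicates(pages))
```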

Now you can sort by various pieces of data and see what actions you need to take. Here are some suggestions:

- Anything with an HTTP status code other than 200 OK should be examined. Broken pages, redirects, and other status codes may need to be fixed.

- Anything with no title or an exceptionally long title might be worth fixing for the SEO benefits a meta title provides. The same goes for the meta description.

- Anything with meta keywords specified should have them removed. Use of the keywords field is pretty much exclusively limited to spammers at this point.

- Anything that isn’t indexed but should be, should be examined to see if you need to remove a robots directive.

- Anything that’s indexed but shouldn’t be, should be examined to see if it needs a noindex directive or should be removed.

- Anything that’s not indexed and shouldn’t be indexed can be removed from the spreadsheet; it’s irrelevant to the audit.

- Anything with external links should have those links checked to see if they’re broken or point to a page you don’t want to support. Determine whether you should nofollow those links.

- Anything with a low speed ranking should be audited to find out why. Anything that is not mobile friendly should be made mobile friendly.
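Most of the rules in the list above can be applied to each spreadsheet row automatically, leaving you to make the judgment calls. This is a minimal sketch assuming hypothetical column names (`status`, `title`, `meta_description`, `meta_keywords`, `indexed`, `should_index`, `pagespeed_score`, `mobile_friendly`). The 60-character title and 160-character description limits are common rules of thumb rather than Google limits, and the speed cutoff of 50 matches the point where PageSpeed Insights starts labeling scores as poor. Checking external links needs separate data and isn’t covered here.

```python
def triage_page(page, max_title=60, max_description=160, min_speed=50):
    """Suggest audit actions for one spreadsheet row.
    Returns None for pages that are neither indexed nor meant to be (drop them)."""
    indexed, should_index = page.get("indexed"), page.get("should_index")
    if not indexed and not should_index:
        return None  # irrelevant to the audit
    actions = []
    if page.get("status") != 200:
        actions.append(f"check HTTP status {page.get('status')}")
    title = (page.get("title") or "").strip()
    if not title or len(title) > max_title:
        actions.append("fix title")
    description = (page.get("meta_description") or "").strip()
    if not description or len(description) > max_description:
        actions.append("fix meta description")
    if page.get("meta_keywords"):
        actions.append("remove meta keywords")
    if should_index and not indexed:
        actions.append("check robots directives")
    elif indexed and not should_index:
        actions.append("noindex or remove")
    score = page.get("pagespeed_score")
    if score is not None and score < min_speed:
        actions.append("audit page speed")
    if page.get("mobile_friendly") is False:
        actions.append("make mobile friendly")
    return actions


old_post = {
    "status": 301, "title": "", "meta_description": "An old guest post.",
    "meta_keywords": "seo, guest posts", "indexed": True, "should_index": True,
    "pagespeed_score": 35, "mobile_friendly": False,
}
print(triage_page(old_post))
```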

Categorize each page by the action you want to take on it, whether you think it should be deleted, or if it’s fine as it is, or if it can be improved. There are a lot of possible actions you can take, from merging pages to updating them to removing them entirely, and figuring out which is which depends on whether or not the page is actively hurting you, is irrelevant, or is perfectly fine as it is.

Once you’re done, expect some fluctuation in your search rankings while Google processes the changes, but you should settle higher than you were before. Good job!

ContentPowered.com
